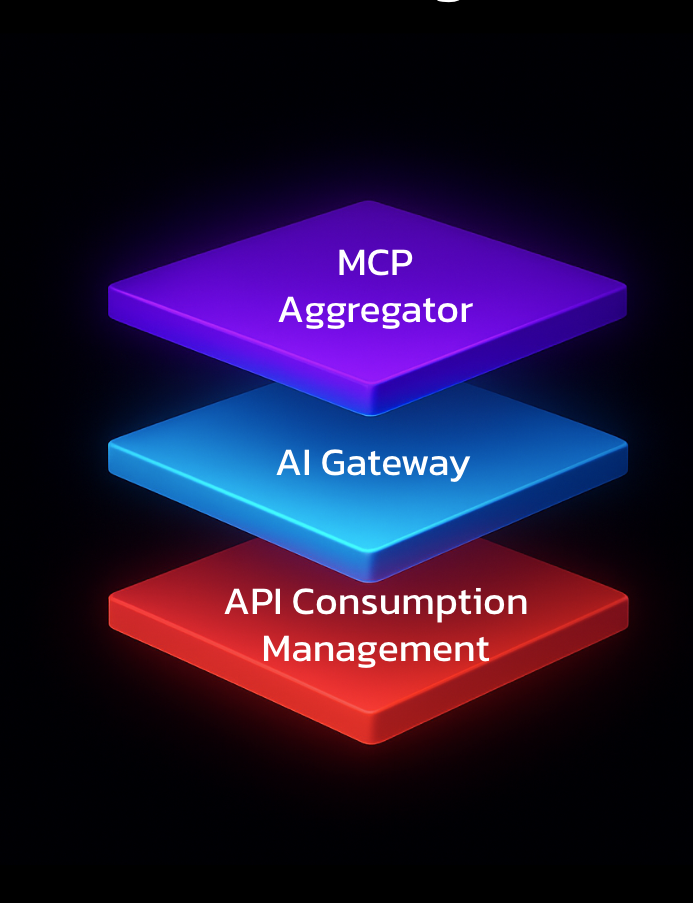

From API Consumption to Agentic Gateways: Building the Layers of AI Traffic Management

Discover how layered AI gateways are revolutionizing enterprise control, security, and governance as AI agents evolve from passive tools to autonomous actors.

When we founded Lunar.dev, we set out to solve a problem every modern software team faces: the explosion of outbound API traffic. In the early days, companies integrated dozens of third-party APIs and essentially hoped for the best. As an engineer, I remember the anxiety of hitting API rate limits, seeing unpredictable bills, and scrambling when an external API changed overnight. It was clear there had to be a better way — a way to build layers of control and visibility that could grow alongside the complexity of the systems they supported.

We began with the belief that outbound API calls deserved the same rigor, governance, and insight that inbound traffic had enjoyed for years. What emerged was not just a single product but a stack of layers, each responding to new industry shifts, customer challenges, and community feedback. Over time, these layers combined into what we now call an agentic gateway — a single control plane for all AI-driven and autonomous traffic.

Layer One: Outbound API Consumption Management

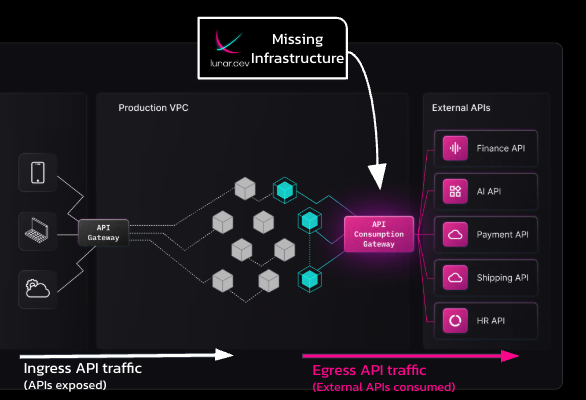

The first layer addressed a simple gap: traditional API gateways like Kong or Apigee were designed for ingress traffic, but our customers were struggling with egress — the calls their services made out to other APIs. Trying to retrofit these tools for outbound use meant messy proxy configurations, hard-to-maintain workarounds, and policy models that didn’t fit.

We flipped the model. Our focus was on API consumers, not providers. We built an egress-focused gateway designed to manage and optimize outbound calls directly. That meant introducing caching to avoid redundant requests, applying quota controls to prevent overages, and giving teams a single point of visibility for all external calls.
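To make the caching and quota ideas concrete, here is a minimal Python sketch of an egress layer. It is an illustration under stated assumptions, not Lunar.dev's implementation: the `EgressGateway` class, the fixed-window quota, the TTL cache, and the injected `fetch` function are all hypothetical names and choices made for this example.

```python
import time
from typing import Callable, Dict, Tuple


class QuotaExceeded(Exception):
    """Raised when a host has used up its call budget for the current window."""


class EgressGateway:
    """Toy egress layer: a TTL cache plus a fixed-window quota per upstream host."""

    def __init__(self, fetch: Callable[[str, str], str], quota_per_window: int,
                 window_seconds: float = 60.0, cache_ttl: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch            # hypothetical function that performs the real call
        self._quota = quota_per_window
        self._window = window_seconds
        self._ttl = cache_ttl
        self._clock = clock
        self._cache: Dict[Tuple[str, str], Tuple[float, str]] = {}
        self._windows: Dict[str, Tuple[float, int]] = {}  # host -> (window start, calls)

    def get(self, host: str, path: str) -> str:
        now = self._clock()

        # Cache hit: no upstream call is made and no quota is spent.
        cached = self._cache.get((host, path))
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]

        # Fixed-window quota: reset the counter once the window has elapsed.
        start, calls = self._windows.get(host, (now, 0))
        if now - start >= self._window:
            start, calls = now, 0
        if calls >= self._quota:
            raise QuotaExceeded(f"{host}: more than {self._quota} calls per {self._window}s")
        self._windows[host] = (start, calls + 1)

        body = self._fetch(host, path)
        self._cache[(host, path)] = (now, body)
        return body
```

Because every service calls `get` on the same object, the cache, the counters, and any logging live in one place, which is the point of routing outbound traffic through a single layer. A production gateway would likely prefer a sliding window or token bucket and would honor upstream cache headers; the fixed window here is only the simplest thing that shows the idea.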

The impact was immediate. Instead of scattering proxy hacks across microservices, companies could point all outbound traffic through one layer. Our dashboard offered a cockpit view: which APIs were called most often, which calls failed and why, and where costs were creeping up. It turned outbound traffic from a black box into something organizations could actively manage and tune.

Layer Two: The AI Gateway Era

As this foundation matured, a new wave reshaped the landscape: Generative AI (GenAI). Practically overnight, enterprises were embedding large language models (LLMs) into co-pilots, chatbots, and automation workflows. This was not just another type of API — it was a fundamentally different traffic pattern.

LLM calls could be bursty, resource-heavy, and in some cases, unpredictable. A single user query might trigger a cascade of downstream calls. Costs could spiral out of control, and new risks emerged: prompt injection attacks, exposure of sensitive data in prompts, and compliance concerns for regulated industries.

We evolved our platform into an AI Gateway layer to address these needs. This layer introduced:

- Token-based quotas to cap runaway usage

- Prompt and output policy checks to block unsafe or non-compliant content

- Credential shielding to prevent long-lived secrets from ever reaching the LLM

- Deep tracing so teams could see the why behind each AI request
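Two of the controls above, token-based quotas and credential shielding, can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `TokenBudget` class, the `admit_prompt` helper, the 4-characters-per-token estimate, and the `sk-` key pattern are hypothetical and do not describe any particular product or provider.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional

# Illustrative pattern only: strings shaped like "sk-" followed by 20+ key characters.
SECRET_PATTERN = re.compile(r"\bsk-[A-Za-z0-9]{20,}\b")


def redact_secrets(prompt: str) -> str:
    """Replace anything that looks like a key before the prompt leaves the gateway."""
    return SECRET_PATTERN.sub("[REDACTED]", prompt)


def estimate_tokens(text: str) -> int:
    """Rough heuristic (about 4 characters per token), not a real tokenizer."""
    return max(1, len(text) // 4)


@dataclass
class TokenBudget:
    """Per-team token cap; usage only ever grows in this toy version."""
    limit: int
    used: Dict[str, int] = field(default_factory=dict)

    def charge(self, team: str, tokens: int) -> bool:
        spent = self.used.get(team, 0)
        if spent + tokens > self.limit:
            return False  # over budget: reject without recording usage
        self.used[team] = spent + tokens
        return True


def admit_prompt(budget: TokenBudget, team: str, prompt: str) -> Optional[str]:
    """Return the sanitized prompt if the team can afford it, otherwise None."""
    safe = redact_secrets(prompt)
    if not budget.charge(team, estimate_tokens(safe)):
        return None
    return safe
```

Redaction runs before the budget check so that the team is charged for what is actually sent. A real gateway would count tokens with the target model's tokenizer and would also charge for the response, which this sketch leaves out.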

By building this layer, we gave organizations the ability not only to control AI usage but also to understand it in context. For a financial services customer, this meant being able to prove that an AI assistant never accessed systems it wasn't authorized to use, while also keeping a detailed record of every decision and interaction.

Layer Three: The Agentic and MCP Gateway Shift

The next evolution came with agentic AI — systems where AI agents don’t just respond to human prompts but take actions, choose tools, and call APIs autonomously. The Model Context Protocol (MCP) accelerated this trend by offering a universal way for agents to interact with external tools.

Suddenly, the traffic we managed wasn't just apps calling APIs or apps calling LLMs. It was AI agents calling APIs through tools, often without a human in the loop. This was powerful but risky. Agents could choose the wrong tool, make high-cost calls, or access sensitive systems without clear oversight.

We extended our gateway into an MCP Gateway layer. This allowed us to:

- Authenticate agents with delegated credentials

- Enforce tool-specific policies and allow-lists

- Apply the same rate and scope controls used in other layers

- Log every step of agent-to-tool interaction
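The allow-list and logging controls can be sketched as a small Python wrapper around tool calls. This is a hedged illustration, not the MCP wire protocol or any SDK: `ToolGateway`, `ToolDenied`, and the agent and tool names in the usage note are hypothetical, and tools are modeled as plain Python callables.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


class ToolDenied(Exception):
    """Raised when an agent requests a tool outside its allow-list."""


@dataclass
class ToolGateway:
    allow_lists: Dict[str, Set[str]]        # agent id -> tool names it may call
    tools: Dict[str, Callable[..., Any]]    # tool name -> callable standing in for a tool
    audit_log: List[Dict[str, Any]] = field(default_factory=list)

    def call(self, agent_id: str, tool: str, **arguments: Any) -> Any:
        # Default deny: an unknown agent has an empty allow-list.
        allowed = tool in self.allow_lists.get(agent_id, set())

        # Record the attempt before acting on it, so denials are logged too.
        self.audit_log.append(
            {"agent": agent_id, "tool": tool, "arguments": arguments, "allowed": allowed}
        )
        if not allowed:
            raise ToolDenied(f"agent {agent_id!r} may not call {tool!r}")
        return self.tools[tool](**arguments)
```

For example, a gateway built with `allow_lists={"billing-agent": {"get_invoice"}}` lets that agent call `get_invoice` but raises `ToolDenied` for `delete_invoice`, and both attempts appear in `audit_log`. Logging before enforcement is a deliberate choice here: the denied calls are often the ones an auditor most wants to see.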

Routing MCP activity through the gateway meant customers could finally trace an AI agent’s behavior from the initial LLM decision to the final API response. This was a huge leap in visibility and governance — a building block toward full lineage, where every action is explainable and auditable.
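One way to picture that kind of trace is as a chain of events linked by parent IDs. The Python sketch below is an assumption about how such records could be modeled, not a description of the gateway's actual data format; `TraceEvent`, its fields, and the `lineage` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class TraceEvent:
    event_id: str
    parent_id: Optional[str]   # None marks the root of the trace
    kind: str                  # e.g. "llm_decision", "tool_call", "api_response"
    detail: str


def lineage(events: List[TraceEvent], leaf_id: str) -> List[TraceEvent]:
    """Walk parent links from a leaf event back to the root; return root-first."""
    by_id: Dict[str, TraceEvent] = {e.event_id: e for e in events}
    chain: List[TraceEvent] = []
    seen: Set[str] = set()
    current: Optional[str] = leaf_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle in trace at {current!r}")
        seen.add(current)
        event = by_id[current]   # KeyError means the trace has a missing link
        chain.append(event)
        current = event.parent_id
    chain.reverse()
    return chain
```

Given an API response event, `lineage` returns the LLM decision and tool call that led to it, in order. The recording only works if every hop passes through the same gateway and carries the parent ID forward, which is why centralizing the traffic matters for auditability.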

The Layers Come Together

By this point, we could see clearly: these weren’t separate products. They were interlocking layers in a single system. Outbound API governance, AI-specific controls, and agentic/MCP enforcement each address different problems, but together they form a holistic agentic gateway.

This unified stack ensures that policies are applied consistently, no matter where a request originates. Whether it’s a front-end service making a Stripe call, a chatbot sending a prompt to OpenAI, or an agent invoking an MCP plugin, it all passes through one control plane. Observability is centralized, policies are unified, and compliance teams get a single source of truth for what happened, when, and why.

Still Building Toward the Vision

We have not yet reached the holy grail of complete lineage — tracing every AI decision from the original human prompt through every agent action and API call, with full context at each step. But we are building toward it. Each deployment, customer discussion, and industry development pushes us closer, adding more detail to the picture and more capabilities to the stack.

Like others in this space, we’re still learning and iterating. But with each new layer, we get closer to turning AI from an opaque, unpredictable actor into a well-governed, transparent part of enterprise infrastructure.

Looking Ahead

The agentic era is still young. We expect more interoperability standards, more sophisticated agents, and new categories of AI-driven automation. Our goal remains the same: provide the infrastructure that lets enterprises embrace autonomy without losing control.

That means refining policies to adapt in real time, expanding integrations with AI development platforms, and deepening our lineage capabilities so organizations can not only observe AI behavior but also understand and trust it. The layers we've built so far are the foundation: the control cockpit from which enterprises can safely scale AI across their operations.

As AI agents take on more critical roles, our job is to ensure the infrastructure behind them is as adaptive, reliable, and intelligent as the agents themselves.

