Granular Control for AI Traffic, Built for Production

AI-driven apps demand a smarter gateway.

Lunar.dev’s AI Gateway provides granular controls for all AI-generated API traffic.

Take AI Faster to Production

Full Observability Into AI-Powered Workflows

Track every LLM call and tool action with full visibility into token usage, cost, latency, and errors. From agent decisions to triggered tools and MCP servers, Lunar gives you complete insight to optimize performance and control spend.

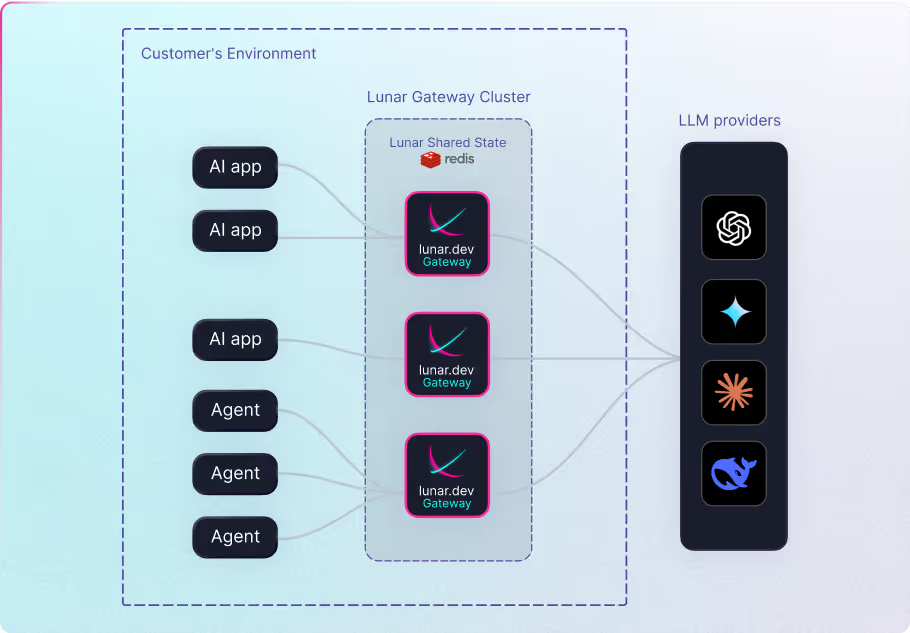

Production-Grade AI Gateway Infrastructure

Lunar is built for scale, resilience, and control. Deploy as a self-hosted cluster with minimal added latency, full tenant control, and the capacity to endure massive volumes of AI traffic. It’s the infrastructure you need to move GenAI from pilot to production with confidence.

Smart Routing and Fallback for Resilient AI Operations

Our gateway clusters share state information, ensuring continuity of service. API calls are intelligently load-balanced across the cluster, maximizing performance and scalability. This ensures your integrations can handle even sudden spikes in traffic without compromising uptime or performance.

Granular Controls for LLM Workloads

Rate Limiting for LLM Traffic

Set limits on AI API calls per user, app, or agent to prevent overuse and avoid hitting provider rate caps
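To make the idea concrete, here is a minimal sketch of per-caller rate limiting using a token bucket. It is an illustration only, not Lunar's implementation or configuration format; the class name `PerKeyLimiter`, its parameters, and the key strings are hypothetical.

```python
import time


class PerKeyLimiter:
    """Token-bucket limiter keyed by user, app, or agent (hypothetical sketch)."""

    def __init__(self, capacity, refill_per_sec, clock=time.monotonic):
        self.capacity = capacity              # maximum burst size per key
        self.refill_per_sec = refill_per_sec  # sustained calls per second per key
        self.clock = clock                    # injectable so the limiter can be tested
        self._buckets = {}                    # key -> (tokens_left, last_update_time)

    def allow(self, key, cost=1.0):
        """Return True if the call may proceed, False if it should be rejected."""
        now = self.clock()
        tokens, last = self._buckets.get(key, (self.capacity, now))
        # Refill in proportion to elapsed time, never above capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        allowed = tokens >= cost
        if allowed:
            tokens -= cost
        self._buckets[key] = (tokens, now)
        return allowed
```

Each key gets its own bucket, so one noisy agent exhausts only its own allowance. Passing a larger `cost` (for example, an estimated token count) turns the same structure into a token-based limit rather than a call-based one.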


Priority Queue for AI Workloads

Prioritize critical LLM requests—like production user queries—over background or non-urgent agent traffic
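As a rough sketch of how prioritization can work, the queue below always releases the lowest priority number first and keeps arrival order within the same priority. The class name `PriorityRequestQueue` and the priority values are assumptions for illustration, not part of Lunar's product.

```python
import heapq
import itertools


class PriorityRequestQueue:
    """Releases the most urgent request first; ties keep arrival order."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker that preserves FIFO order

    def put(self, request, priority):
        # Lower number means more urgent (0 = production user query).
        heapq.heappush(self._heap, (priority, next(self._seq), request))

    def get(self):
        # Raises IndexError when the queue is empty.
        _priority, _seq, request = heapq.heappop(self._heap)
        return request

    def __len__(self):
        return len(self._heap)
```

The sequence counter matters: without it, two requests with equal priority would be compared by their payloads, which can raise an error or reorder traffic unpredictably.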

Data Sanitization for Prompt Safety

Redact or filter sensitive data from AI prompts and tool inputs before they’re sent to LLMs or APIs
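A minimal sketch of prompt redaction, assuming two simple patterns: email addresses and secrets that look like `sk-` followed by 16 or more alphanumeric characters. The patterns, placeholder strings, and the `redact` function are hypothetical, and a production filter would need far broader coverage.

```python
import re

# Illustrative patterns only; real deployments need a wider, tested set.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SECRET = re.compile(r"\bsk-[A-Za-z0-9]{16,}\b")


def redact(text):
    """Replace email addresses and key-like strings before the prompt leaves."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return SECRET.sub("[REDACTED_KEY]", text)
```

Running the filter at the gateway rather than in each app means every outbound prompt and tool input passes through the same rules.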

LLM Model Routing

Dynamically route LLM calls across providers (e.g. OpenAI, Claude, Gemini) based on task type, token usage, or cost
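One way to picture cost-aware routing with fallback is the sketch below: filter routes by task type and token limit, try the cheapest first, and move to the next one on failure. The `Route` fields, the route names, and the `send` callback are all hypothetical; this is not Lunar's routing API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str                  # hypothetical label for a provider/model pair
    cost_per_1k_tokens: float  # placeholder price, not a real quote
    max_tokens: int            # largest request this route accepts
    tasks: frozenset           # task types this route is allowed to serve


def choose_routes(routes, task, est_tokens):
    """Return eligible routes for the task, cheapest first."""
    eligible = [r for r in routes if task in r.tasks and est_tokens <= r.max_tokens]
    return sorted(eligible, key=lambda r: r.cost_per_1k_tokens)


def call_with_fallback(routes, task, est_tokens, send):
    """Try each eligible route in cost order; fall back when a call fails."""
    last_error = None
    for route in choose_routes(routes, task, est_tokens):
        try:
            return route.name, send(route)
        except Exception as exc:  # a real gateway would catch narrower errors
            last_error = exc
    raise RuntimeError("no route available") from last_error
```

Here `send` stands in for whatever function actually calls the provider, so the selection and fallback logic stays independent of any one SDK.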

Prompt & Payload Transformer

Modify prompts, tool calls, or response payloads in-flight to optimize how agents interact with LLMs

Custom AI Metrics Collection

Track custom KPIs—like token usage per agent, error rates, or model cost efficiency—across your entire AI pipeline

Secure Your GenAI Stack with Real Enforcement

Lunar’s AI Gateway brings production-grade policy enforcement to your outbound AI traffic—built to handle what OWASP calls out as critical LLM risks.

Unbounded Consumption

Prevent runaway costs and outages with token-level rate limiting, quota enforcement, and priority-based request controls across all AI providers.

Excessive Agency

Restrict what agents can do with fine-grained access controls, including per-tool policies, scoped permissions, and human-in-the-loop gating for sensitive actions.

Prompt Injection

Shield downstream APIs from adversarial prompts by inspecting, validating, and filtering all outbound traffic—before it ever hits the model.

Sensitive Information Disclosure

Protect your data by enforcing redaction, header stripping, and outbound payload auditing to prevent accidental leakage into LLM prompts or completions.

Additional Resources

Bringing Your AI App to Production?

Easy setup. Complete control. Everything you need, out of the box.