.png)

MCP Prompts at Runtime: How Agents Reason, Execute, and Stay Accurate

MCP Prompts are more than reusable templates. In production agent systems, they act as runtime control surfaces that shape reasoning, tool selection, and safety. As MCP ecosystems scale, prompts become a governance and security concern, not just a developer convenience. This post explains how MCP Prompts influence agent execution and why a gateway layer like Lunar MCPX is required to scope tools, enforce workflows, and govern prompts safely at scale.

MCP Prompts are often introduced as reusable prompt templates, but in real agent systems, they play a much deeper role. At runtime, MCP Prompts shape how an agent reasons, how it scopes its actions, and how safely it interacts with tools and systems.

This post looks at MCP Prompts through the lens of agent execution, not just protocol definitions. We will first explain how MCP Prompts affect agent behavior, then show why prompts become a governance and security problem at scale, and finally explain how Lunar’s MCP Gateway supports prompts at two distinct layers, unlocking accuracy, safety, and reuse.

MCP Prompts as Runtime Control Surfaces

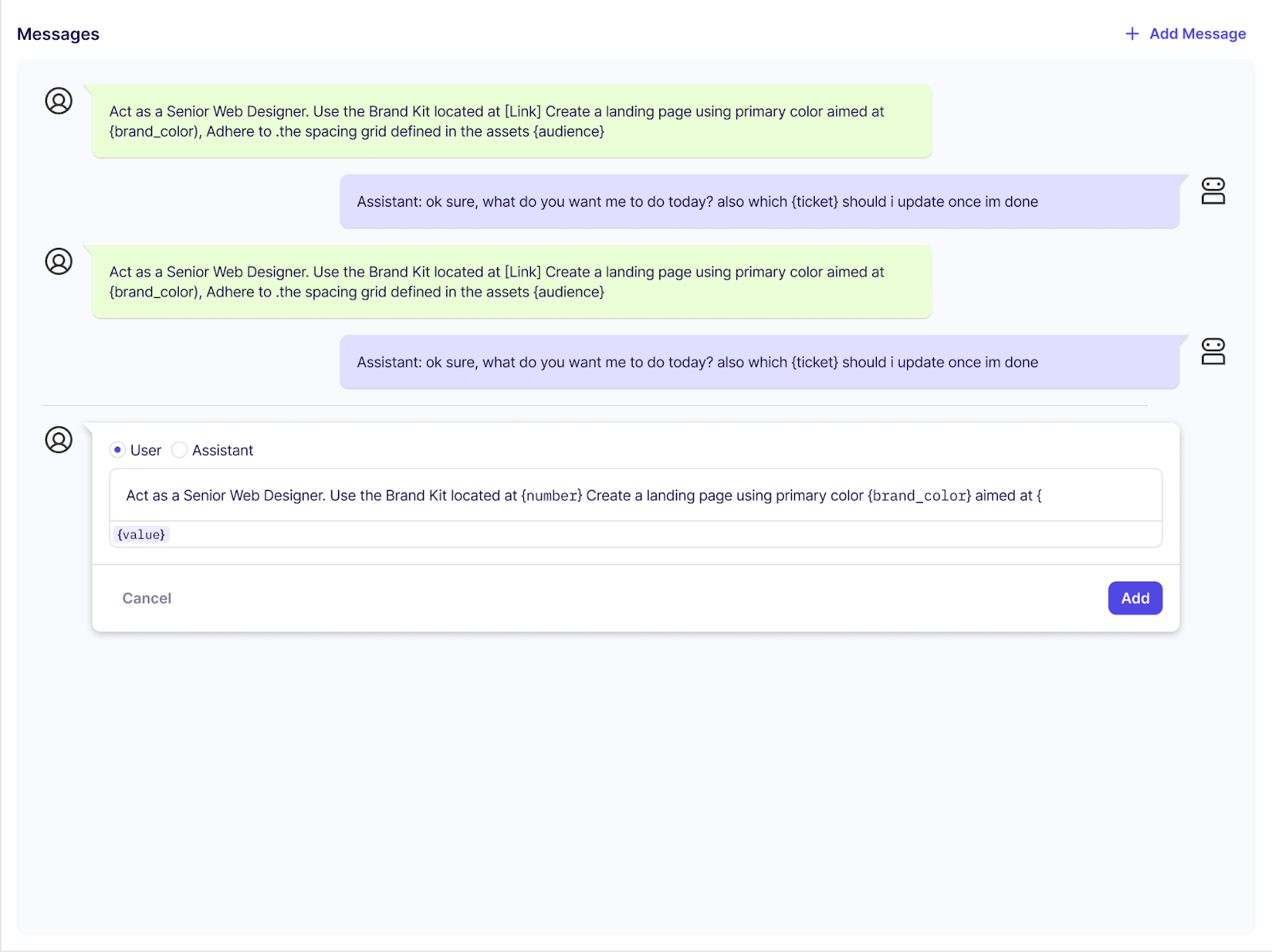

At a protocol level, an MCP Prompt is a server-defined artifact that can be discovered and retrieved by a client. At runtime, however, it functions as a control surface for agent behavior.

When an agent invokes a prompt, it is not simply receiving text. It is being placed into a specific reasoning mode. The prompt instructions bias how the model interprets input, how it weighs tool descriptions, and how it plans subsequent actions.

This is an important distinction. Free-form chat input requires the model to infer intent from natural language. MCP Prompts, by contrast, encode intent explicitly. The agent does not need to guess what kind of task it is performing. That intent is already embedded in the prompt definition.

This is why MCP Prompts tend to produce more predictable and repeatable outcomes than ad hoc prompting.

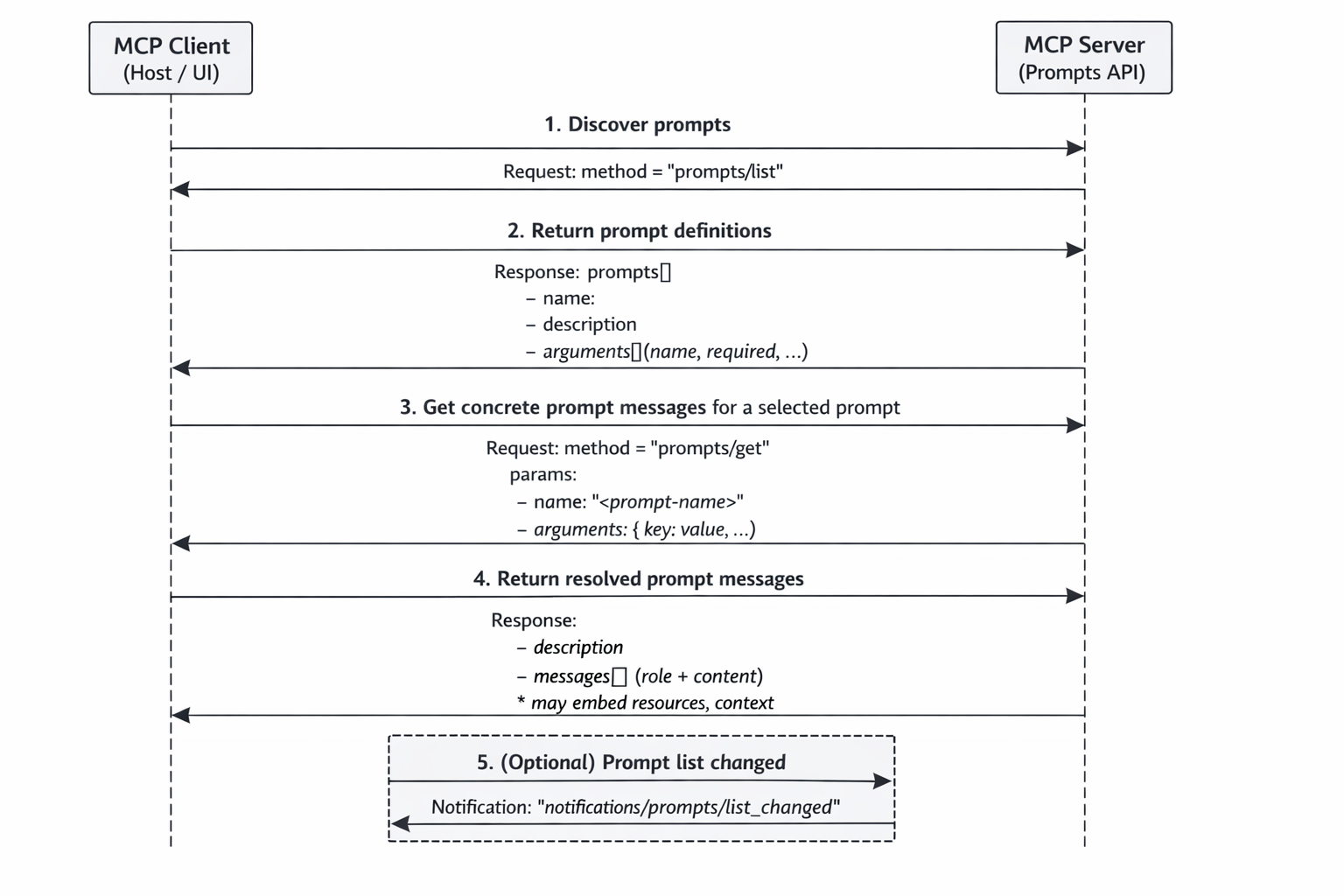

Runtime Flow of an MCP Prompt

From the agent’s point of view, prompt execution follows a deterministic flow:

The client first retrieves the list of prompts exposed by an MCP server by calling prompts/list. Once a prompt is selected, the client calls prompts/get with the required arguments. The server responds with a structured set of messages that are injected directly into the model context.

For example:

{

"name": "analyze_config_risk",

"arguments": {

"config": "<service configuration>"

}

}

At runtime, the agent now reasons under the assumption that it is performing a risk analysis task. That assumption influences everything that follows, including which tools the agent considers relevant and how it interprets their descriptions.

How Prompts Affect Tool Selection

One of the most important, and subtle, effects of MCP Prompts is how they influence tool selection.

In MCP-based systems, tool definitions are injected into the model’s context. The agent reasons over the prompt instructions and the available tool descriptions simultaneously. This means prompts and tools are tightly coupled in the agent’s reasoning loop.

A well-scoped prompt biases the agent toward the correct tools. A poorly scoped prompt, combined with a large set of tools, forces the model to choose among many irrelevant options. As the number of tools grows, this leads to slower reasoning, higher token usage, and a higher probability of incorrect tool invocation.

This is not just a performance issue. It is also a safety issue. When agents see tools they should not use, mistakes become more costly. As MCP ecosystems scale, tool overload becomes one of the primary failure modes of agent systems.

This is where gateways, and specifically Lunar MCPX, begin to play a critical role.

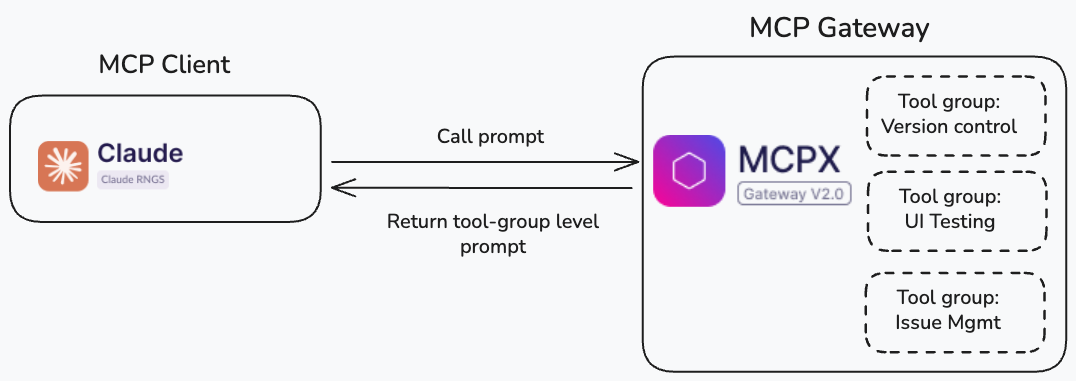

MCPX-Level Support for MCP Prompts

Lunar MCPX supports MCP Prompts at two distinct levels. The first is native prompt support at the gateway itself, where prompts are defined, scoped, and enforced centrally.

Gateway-Native Prompts, Tool Groups, and Accuracy

When prompts are defined at the MCPX gateway level, they can be directly combined with Tool Groups. Tool Groups are curated subsets of tools that are exposed to an agent for a specific prompt or workflow.

At runtime, this means that when a prompt is invoked, MCPX activates a specific Tool Group and exposes only the relevant tools to the agent. The agent no longer reasons over the entire tool universe, but over a small, purpose-built set aligned with the prompt’s intent.

This dramatically improves agent accuracy. Tool selection becomes deterministic rather than probabilistic. Latency and token usage drop because the context is smaller. Most importantly, the blast radius of agent mistakes is reduced, because tools outside the group are simply not available.

In this model, prompts define what the agent is trying to do, while Tool Groups define what the agent is allowed to do. MCPX enforces both before the model is even invoked.

Tool Chaining and Multi-Step Workflows

Many MCP Prompts implicitly initiate multi-step workflows. A single prompt may require multiple tool calls, chained together as the agent reasons through the task.

Without infrastructure support, this relies entirely on the model’s ability to plan and execute steps probabilistically. MCPX allows these workflows to be anchored at the gateway. Prompts can be associated with predefined execution constraints and tool group boundaries, ensuring that chaining occurs within known limits.

This shifts responsibility away from the model and into infrastructure, where workflows can be tested, audited, and enforced. The agent still reasons, but it reasons within guardrails.

Governing Prompts from External MCP Servers

The second level of MCP Prompt support in MCPX is governance over prompts exposed by external MCP servers.

As the MCP protocol matures, more servers are beginning to expose prompts as first-class features. We already see this in developer tooling servers, internal automation servers, and platform services that expose prompts for common operations. As MCP Resources gain wider adoption, this trend will accelerate.

This evolution means that MCP Gateways must also evolve. A gateway that only proxies tool calls is no longer sufficient. It must understand more layers of the MCP protocol, including prompts and (soon resources).

MCPX allows organizations to inspect, review, and govern prompts exposed by external MCP servers. This enables a more holistic gateway that understands how agents are instructed, not just what APIs they call.

Security and Risk Evaluation of MCP Prompts

From a security perspective, prompts are an attack surface. Prompt injection can occur through arguments, resources, or tool output that is embedded into prompt messages. Over-permissioned prompts can allow agents to invoke tools they should not have access to.

MCPX addresses this by running validation and policy enforcement on prompts before execution. Prompt content can be inspected for unsafe instruction patterns. Argument schemas can be validated. Prompts can be restricted to specific roles, environments, or tool groups.

In addition, MCPX provides visibility and auditability. Teams can see which prompts exist, who uses them, and what tool actions they trigger. Over time, prompts can be shared as approved enterprise resources rather than ad hoc instructions scattered across agents.

Why MCP Prompts Call for a Gateway Layer

MCP Prompts make agents more capable, more predictable, and easier to reason about. They encode intent explicitly and reduce ambiguity in agent behavior.

At the same time, they introduce new governance challenges. Prompts influence tool selection, workflow execution, and security posture. As MCP adoption grows, these concerns cannot be handled at the agent level alone.

This is why MCP Prompts naturally call for a gateway governance layer. Lunar MCPX fills this role by supporting prompts natively, combining them with tool scoping and chaining, and governing prompts exposed by external MCP servers.

Prompts help agents do the right thing.

Gateways ensure they are only able to do the right thing.

As MCP continues to mature, this separation of concerns will become foundational for building reliable, production-grade agent systems.

TL;DR: MCP Prompts and the Gateway Layer

MCP Prompts are more than templates; they are runtime control surfaces that explicitly define an agent's intent, leading to predictable and accurate behavior.

Key Takeaways:

- Intent & Accuracy: Explicit prompts ensure predictability and improve agent reasoning/planning.

- Tool Scoping: Without guardrails, agents struggle with a vast "tool universe."

- The Gateway Solution (MCPX): Lunar MCPX uses Gateway-Native Prompts and Tool Groups to limit context, ensuring deterministic tool selection, higher accuracy, and reduced safety risk.

- Governance & Security: The gateway centrally inspects and enforces policies on all prompts, mitigating prompt injection and ensuring agents operate within defined permissions.

Ready to Start your journey?

Manage a single service and unlock API management at scale

.png)